Disclaimer All information is offered in good faith and in the hope that it may be of use for educational purpose and for Database community purpose, but is not guaranteed to be correct, up to date or suitable for any particular purpose. db.geeksinsight.com accepts no liability in respect of this information or its use.

This site is independent of and does not represent Oracle Corporation in any way. Oracle does not officially sponsor, approve, or endorse this site or its content and if notify any such I am happy to remove.

Product and company names mentioned in this website may be the trademarks of their respective owners and published here for informational purpose only.

This is my personal blog. The views expressed on these pages are mine and learnt from other blogs and bloggers and to enhance and support the DBA community and this web blog does not represent the thoughts, intentions, plans or strategies of my current employer nor the Oracle and its affiliates or any other companies. And this website does not offer or take profit for providing these content and this is purely non-profit and for educational purpose only.

If you see any issues with Content and copy write issues, I am happy to remove if you notify me.

Contact Geek DBA Team, via geeksinsights@gmail.com

|

Very old thing, but to just refresh, Ever wonder how the extent sizes is managed if your tablespace is in LMT and autoallocate as like below.

CREATE TABLESPACE large_tables

DATAFILE ‘/u01/oracle/data/DB1/large_tables_01.dbf’

SIZE 100M EXTENT MANAGEMENT LOCAL AUTOALLOCATE;

When you specify auto allocate, Oracle will allocate extent sizes of 64KB, 1MB, 8MB, 32MB or 64MB. and even 256MB (according to Jonathan in his very old post)

The first sixteen extents will all be 64KB in size.

When the seventeenth extent is allocated, Oracle decides that it had the wrong extent size and allocates a larger, 1MB extent.

After sixteen 1MB extents have been allocated, future extents will be 8MB in size.

In short, (but this depends on the space available too)

IF dba_segment.bytes < 1M THEN

next extent = 64K

ElSIF 1M <= dba_segment.bytes < 64M THEN

next extent = 1M

ELSIF 64M <= dba_segment.bytes < 1G

THEN next extent = 8M

ELSE

next extent = 64MB,

Further , Each BMB controls up to:

=> 16 blocks until the segment is 1Mb

=> 64 blocks until the segment is 32Mb

=> 256 blocks until the segment is 1Gb

=> 1024 blocks once segment is >1Gb

Very good article on LMT vs DMT: Download here

A brief history about Oracle Flashback , its starting version, various enhancements, What it uses to manage the flashback.

| Version |

Flashback Option available |

Provides |

Uses |

Depends on |

| 9i |

Flashback Query |

allows the user to query the data at some point-in-time in the past to reconstruct the lost data that may have been deleted or changed by accident |

Undo |

undo_retention |

| 10g |

Flashback Version Query |

provides a mechanism to view changes made to the database over the time at row level |

undo |

undo_retention |

| 10g |

Flashback Transaction Query |

provides a mechanism to view changes made to the database at the transaction level , dba_transaction_query you can view the versions of statements for that transactions and revert to particular statement |

undo |

undo_retention |

| 10g |

Flashback Table |

provides the ability to recover a table, a set of tables to the specific point in time |

undo |

undo_retention |

| 10g |

Flashback Drop |

provides a safety net when dropping objects as you can very quickly and easily undo the drop |

recyclebin |

recyclebin on |

| 10g |

Flashback database |

is a new strategy for doing point-in-time recovery. It quickly rewinds an Oracle database to a previous time to correct any problems cause by logical data corruption or by human mistakes |

flashbacklogs

and

uses rvwr bg process |

flashback area |

| 10g |

Flashback Standby Database |

This flashback feature improves the switchover and failover time of a standby database. You no longer need to specify a log apply delay, because you can now roll back the standby database if an error occurs on the primary and is propagated to the standby. Useful when there is a logical corruption to avoid same propagated to standby |

flashback logs/archives |

delay option with automatic recovery

recover managed standby database delay 60 disconnect; |

| 10g |

Flashback Reinstantiation |

This flashback feature reduces the need to instantiate the old primary database following a failover. This in turn lets you restore full resiliency after a failure more quickly. You can do this by using the SQL statement FLASHBACK DATABASE to roll back the primary database in time to synchronize with the standby database. |

select standby_became

_primary_scn from v$database; |

flashback old primary to the scn came from standby (current primary) |

| 11gR1 |

Flashback Data Archive

(Total Recall) |

provides support for querying the history data using the familiar flashback feature (flash back query) with sql construct clause “AS of”, beyond the undo retention or flash back archive limit |

A separate flashback archive tablespace and uses fdba bg process |

object clause Retention

alter table employees flashback archive <ts>; |

Hope this helps, this information is gathered from different sources and updated here in, if any of above seems inaccurate please update me , will correct the same.

-Thanks

Geek DBA

We all know that when a query/statement is running from long time (actually this post is meant to know at what times :)) , the access path will be shown in v$session_longops

Some of the access paths are as below

- Table scan;

- Index Fast Full Scan;

- Hash join;

- Sort/Merge;

- Sort Output;

- Rollback;

- Gather Table's Index Statistics.

Before going to each of them, we should need to know about some important columns in v$session_longops,

Time_Remaining

Elapsed_seconds

Total_work

So_FAR

So Time_Remaining = ElapsedSeconds * (TotalWork-Sofar)/ Sofar

Note:- SOFAR and Total work are blocks not in the seconds.

So now, at what thresholds (can be time or certain number of blocks) for each of above access paths. The wide known answer is 6 seconds

TableScan:-

The common and widely known criteria to appear a full tablescan in v$session_longops is 6 seconds, but there is another threshold also but for count of blocks which is 10000 blocks. if the table has less than 10000 blocks but table scan runs more than 6 seconds that’s not enough.

Index Fast Full Scan:-

The criteria for this access path is 1000 index blocks i.e 10 times lesser than tablescan criteria. then only this access path will appear.

Hash Join:-

Hash joins are usually used joining large data sets. The performance of hash joins strongly depends on available memory either allocated by pga_aggregate_target (if using workarea_size_policy = auto) or hash_area_size (if using workarea_size_policy = manual). Block is the unit to measure hash join work in v$session_longops.

It depends, but most of the cases 6 seconds timing is the criteria but it depends on largely the sort and work area size due to usage of PGA memory for hashing. But even smaller size of blocks usually appear (say for example 20 blocks etc) will get appear in v$session_longops.

Further, Oracle consider the long operations as linear operations, so the estimate time may not accurate if its the operation is not real.

-Thanks

Geek DBA

How to measure the GES Resources (enqueues for RAC ) limit in RAC?

Oracle preserves the integrity of the users data during simultaneous use of the same data blocks by different instances using GCS. In addition to data blocks, many other shared resources can be concurrently accessed by end users. Oracle uses a queuing mechanism to ensure proper use of these shared resources. In an RAC environment , Global Enqueue services (GES) protects and regulate the access to these shared resources.

In typical single instance database, when you updating a controlfile (CF enqueue), of individual row (TX enqueue), or of an exclusive lock on a table (TM enqueue) will be created. But in RAC environment as these resources (any of above) are shared these should be managed globally. GES protects and managing this enqueues with proper queueing mechanism. So typically enqueues are kind of locks, but here in RAC they were at SGA , i.e in memory structures.

We can keep dml_locks for an row based enqueue , but how about this GES enqueues.

Oracle will caculate the following while RAC instance startup for GES Resources:-

GES Resources= DB_FILES+DML_LOCKS+ENQUEUE_RESOURCES+PROCESSES+TRANSACTIONS+200) * 1 (1+(N-1) / N)

Where N is the number of instances.

You can see the GES_RESOURCES in v$resource_limit view for the maximum and current_utilization.

RAC Wait Event Naming Conventions

Events that start with “GCS%” and “gc%” are Cache Fusion-related waits. In other word, they have to do with waits experienced in shipping current or consistent-read versions of blocks across instances in a RAC cluster

Events that start with “ges%’ are related to Global Enqueue Services

Block-Oriented RAC Waits

“gc current block 2-way” - Indicates that a current block was sent from either the resource master requiring 1 message and 1 transfer

“gc current block 3-way” – Indicates that a current block was forwarded to a 3rd node from which it was sent, requiring 2 messages and one block transfer.

Continue reading Oracle RAC Wait events

I raised a SR that am not able to see the gc buffer busy wait event as expected and eventually got to know that it is no more.

Before proceeding, what is gc buffer busy? Event ‘gc buffer busy’ event means that a session is trying to access a buffer,but there is an open request for Global cache lock for that block already, and so, the session must wait for the GC lock request to complete before proceeding. This wait is instrumented as ‘gc buffer busy’ event.

But in 11g you cannot see this event any more, 11g onwards, this wait event is split in to ‘gc buffer busy acquire’ and ‘gc buffer busy release’.

gc buffer busy acquire, is an event waiting for an access to a block/buffer in local instance, where some other session is in same local instance has already had a place holder on it (btw, gc cur/cr request are placeholders (enqueue)) in this case may be an instance 1.

‘gc buffer busy release’. is an event recorded when the remote instance is waiting for an access to the same block/buffer but from remote instance (in this case instance 2).

For example,

1) A block 55060 is placed in a Instance 1 buffer with gc current request by a session SID 30, a wait event recorded for this “gc current request”

2) The same block 55060, is need by session SID 38 in Instance 1 itself to acquire gc lock, a wait event recorded in instance 1 will be “gc buffer busy acquire”

3) And the same block/buffer 55060, is also need by session SID 56 in instance 2 to acquire gc lock for some modifications, now a event will be recorded in instance 2 will “gc buffer busy release”

Earlier to 11g the above 2,3 recorded as gc buffer busy only.

Look at the output.

INST_ID SID EVENT USERNAME STATE Text

------- ------ ----------------------- ---------- -------- -------------------------------

1 30 gc current request SYS WAITING 2 file# 5-block# 56050 id-1939303

1 38 gc buffer busy acquire SYS WAITING file# 10-block# 56050-class# 1

2 56 gc buffer busy release SYS WAITING file# 10-block# 56050-class# 1

-Thanks

Geek DBA

Oracle 10g provides the Automatic Worload Repository. The AWR is a repository of performance information collected by the database to aid in the tuning process for DBAs. The main focus for the Oracle database in version 10g is self-manageability. AWR is the place where the data to aid in self-management is stored.

WHAT DOES AWR DO?

Historically people used bstat and estat to collect Oracle statistics over a time period and then compare them to each other. The bstat/estat approach was replaced with statspack available in Oracle 8i. Statspack was a package provided by Oracle that did roughly the same thing but better. Statspack has now been surpassed in functionality by AWR which is always collecting execution statistics for future analysis and tuning performed by all of the expert components provided by Oracle. Oracle recommends that all statspack users switch to AWR in 10g.

Continue reading Quiz Post #10: What are the meanings for different letter for awr base tables WR#

Imagine how it could be if you can use your image copies of your databases as your UAT/dev databases, without much usign complex restore procedure, how it could be if you refresh/clone the databases.

The answer is is using Oracle Direct NFS Client and dbms_dnfs package.

Clonedb is a new Direct NFS (DNFS) feature introduced in the 11.2.0.2 database patchset. Rather than using the traditional RMAN database duplication, clonedb uses dNFS technology to instantly fire up a clone using an existing backup of a database as the data store. The clone uses copy-on-write technology, so only changed blocks need to be stored locally, while the unchanged data is referenced directly from the backup files. Continue reading Clone Database: Turn your backup (rman image copy) to a database without copy/restore?

Hello,

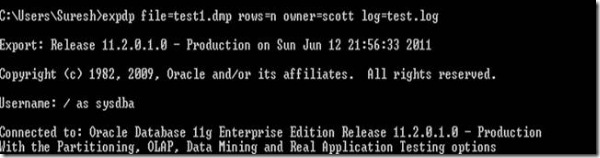

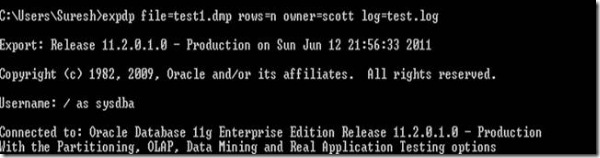

Am trying to use expdp, Watch this screenshot closely

and you ever wonder that how does it works, as I have used , row=n, file=test1.dmp with expdp which should not work but it is. Continue reading Data Pump: Expdp/impdp legacy support.

Hello All,

We all build RAC infrastructure and we have seen many cases that environment is not stable after live or when start using it.

The reasons may be many, but due to vast technologies involvement in high availability concepts we do not know or we ignore many aspects while doing environment testing.

It is indeed very important, We need to do end to end test cases with regards to failovers as the high availability infrastructure is meant for that. Zero failure tolerance, or Connection failover or what ever the case this test cases should be structured, well organized so that our high availability solution to business critical systems should be fool proof.

Have collected the RAC failover test cases from various sources and modified a bit , attached here in with categorical wise which may be useful to you all when some one ask you about to do testing of RAC new environment.

This documents contains,

Category of testing

Action & Target we are testing

Steps to carry out testing

Expected result of testing

Test result to record

| Category |

Test Cases |

Document |

Remarks |

Clusterware

Testing |

Private Network Cable FailuresPublic Network Cable Failures

RAC Host Failures

Oracle HA Features

Vendor HA Features

11gR2 New Features testing (OCR/Voting/HAIP etc) |

Download here |

|

| Storage |

RAC Host-to-Storage Cable FailuresRAC Host-to-Storage NIC/HBA Failures

Storage Rack/Enclosure Failures

Oracle File Corruption or I/O Fencing Scenarios

NAS/SAN Storage Administrative Tasks

|

Download here |

|

| Stress |

Run heavy database load |

Download here |

|

| ASM |

Kill ASM InstanceFail ASM Disks

Reboot node while adding one ASM Disk

Destroy One failgroup while in normal redundancy |

Download here |

|

| ACFS |

Kill VDBG ProcessPhysically fail one of the ASM failure diskgroups

Resize ACFS disks by acfsutil |

Download here |

|

-Thanks

Geek DBA

|

Follow Me!!!