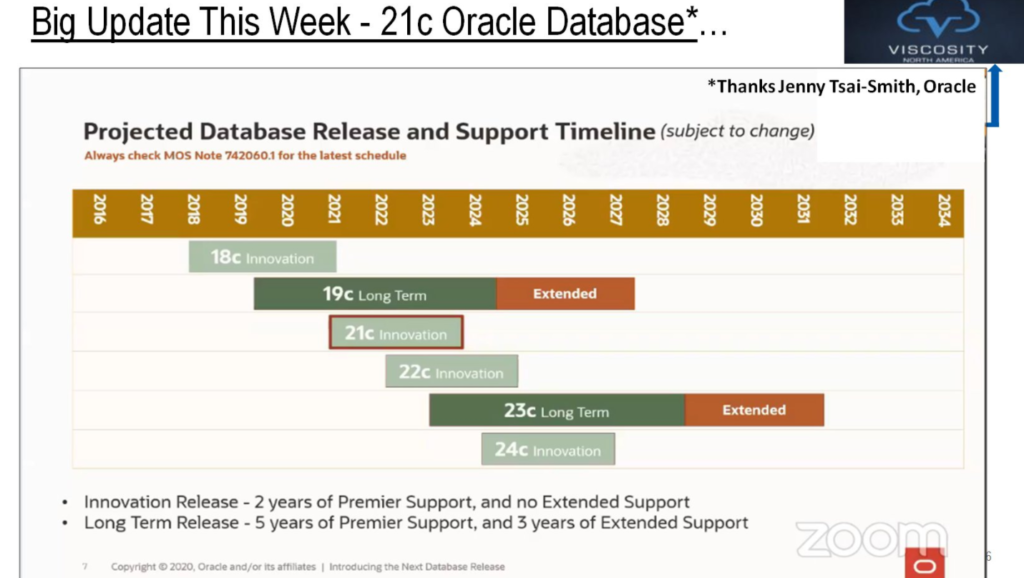

Here is a summary, compiled from various blogs and posts, of the new features in the upcoming Oracle Database 23c release.

OLTP and Core DB:

Accelerate SecureFiles LOB Write Performance

Automatic SecureFiles Shrink

Automatic Transaction Abort

Escrow Column Concurrency Control

Fast Ingest (Memoptimize for Write) Enhancements

Increased Column Limit to 4k

Managing Flashback Database Logs Outside the Fast Recovery Area

Remove One-Touch Restrictions after Parallel DML

Annotations – Define Metadata for Database Objects

SELECT Without the FROM Clause

Usage of Column Alias in GROUP BY and HAVING

Table Value Constructor – Group Multiple Rows of Data in a Single DML or SELECT Statement

Better Error Messages to Explain Why a Statement Failed to Execute

New Developer Role: `dbms_developer_admin.grant_privs('JULIAN');`

Schema Level Privileges

RURs (Release Update Revisions) are transitioning to MRPs (Monthly Recommended Patches), available on Linux x86-64

Application Development:

Aggregation over INTERVAL Data Types

Asynchronous Programming

Blockchain Table Enhancements

DEFAULT ON NULL for UPDATE Statements

Direct Joins for UPDATE and DELETE Statements

GROUP BY Column Alias or Position

Introduction to JavaScript Modules and MLE Environments

MLE – Module Calls

New Database Role for Application Developers

OJVM Web Services Callout Enhancement

OJVM Allow HTTP and TCP Access While Disabling Other OS Calls

Oracle Text Indexes with Automatic Maintenance

Sagas for Microservices

SQL Domains

SQL Support for Boolean Datatype

SQL UPDATE RETURN Clause Enhancements

Table Value Constructor

Transparent Application Continuity

Transportable Binary XML

Ubiquitous Search With DBMS_SEARCH Packages

Unicode IVS (Ideographic Variation Sequence) Support

Compression:

Improve Performance and Disk Utilization for Hybrid Columnar Compression

Index-Organized Tables (IOTs) Advanced Low Compression

Data Guard:

Per-PDB Data Guard Integration Enhancements

Event Processing:

Advanced Queuing and Transactional Event Queues Enhancements

OKafka (Kafka Java client API for Oracle Transactional Event Queues)

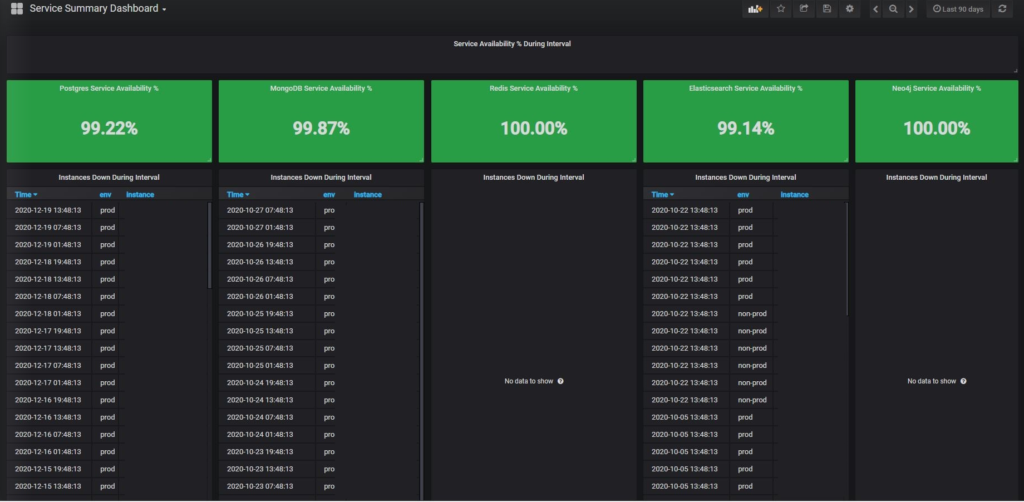

Prometheus/Grafana Observability for Oracle Database

In-Memory:

Automatic In-Memory Enhancements for Improving Column Store Performance

Java:

JDBC Enhancements to Transparent Application Continuity

JDBC Support for Native BOOLEAN Datatype

JDBC Support for OAuth 2.0 for DB Authentication and Azure AD Integration

JDBC Support for RADIUS Enhancements (Challenge-Response Mode, a.k.a. Two-Factor Authentication)

JDBC Support for Self-Driven Diagnosability

JDBC-Thin Support for Longer Passwords

UCP Asynchronous Extension

JSON:

JSON-Relational Duality View

JSON Schema

RAC:

Local Rolling Patching

Oracle RAC on Kubernetes

Sequence Optimizations in Oracle RAC

Simplified Database Deployment

Single-Server Rolling Patching

Smart Connection Rebalance

Security:

Ability to Audit Object Actions at the Column Level for Tables and Views

Enhancements to RADIUS Configuration

Increased Oracle Database Password Length: 1024-Byte Password

Schema Privileges to Simplify Access Control

TLS 1.3

Sharding:

JDBC Support for Split Partition Set and Directory-Based Sharding

New Directory-Based Sharding Method

RAFT Replication

UCP Support for XA Transactions with Oracle Database Sharding

Spatial and Graph:

Native Representation of Graphs in Oracle Database

Spatial: 3D Models and Analytics

Spatial: Spatial Studio UI Support for Point Cloud Features

Support for the ISO/IEC SQL Property Graph Queries (SQL/PGQ) Standard

Use JSON Collections as a Graph Data Source

Sources: Lucas Jellema, renenyffenegger, threadreaderapp, phsalvisberg, juliandontcheff
