In the previous post we saw how to install MongoDB and create a standalone database.

In this post we will see how to achieve high availability in MongoDB by using replica sets. A replica set plays a role similar to a standby database in Oracle. Its members are called the primary and the secondaries. Whenever data is modified (DML) on the primary, the change is propagated to the secondaries, and the members vote among themselves to decide which one holds the primary role. If your replica set has only two nodes, you will need to set up an arbiter for the voting process.
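The voting arithmetic behind the arbiter advice can be sketched in a few lines of JavaScript (the language of the mongo shell, also runnable with Node.js). It assumes an election needs a strict majority of the voting members; the function names `majority` and `faultTolerance` are illustrative and not part of any MongoDB API.

```javascript
// Votes required to elect a primary: a strict majority of the voting members.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// Number of voting members that can be lost while a primary can still be elected.
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

for (const n of [2, 3]) {
  console.log(
    `${n} voting members: majority=${majority(n)}, tolerated failures=${faultTolerance(n)}`
  );
}
```

With two voting members the majority is still two, so losing either node leaves the survivor unable to win an election. Adding an arbiter brings the count to three voting members, and one failure can then be tolerated.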

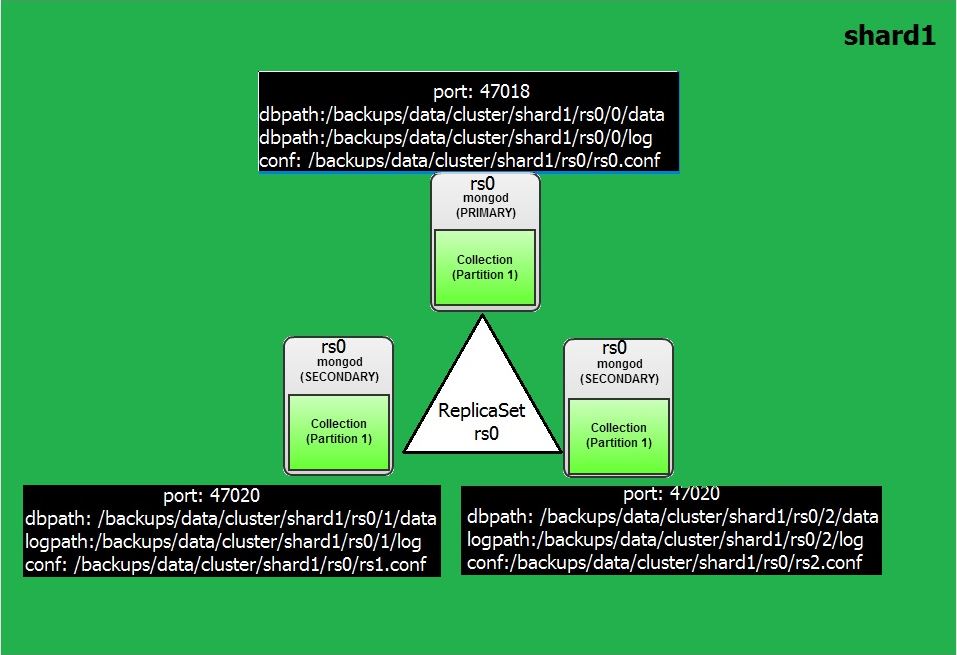

To demonstrate this, I will create a replica set on a single host for learning purposes. Each instance needs its own directories for data and log files and its own port number. Here is the directory structure for a replica set with one primary and two secondaries; note the port numbers, which we will set in the configuration files.

| Parameter | Primary | Secondary 1 | Secondary 2 |
|---|---|---|---|
| Base Directory | /backups/data/cluster/shard1/ | /backups/data/cluster/shard1/ | /backups/data/cluster/shard1/ |
| Replica Set Directory | /backups/data/cluster/shard1/rs0 | /backups/data/cluster/shard1/rs0 | /backups/data/cluster/shard1/rs0 |
| Node Identification | /backups/data/cluster/shard1/rs0/0 | /backups/data/cluster/shard1/rs0/1 | /backups/data/cluster/shard1/rs0/2 |
| Port Number | 47018 | 47019 | 47020 |
| Config File Location | /backups/data/cluster/shard1/rs0.conf | /backups/data/cluster/shard1/rs1.conf | /backups/data/cluster/shard1/rs2.conf |
| Data File Location | /backups/data/cluster/shard1/rs0/0/data | /backups/data/cluster/shard1/rs0/1/data | /backups/data/cluster/shard1/rs0/2/data |
| Log File Location | /backups/data/cluster/shard1/rs0/0/logs | /backups/data/cluster/shard1/rs0/1/logs | /backups/data/cluster/shard1/rs0/2/logs |
| PID File Location | /backups/data/cluster/shard1/rs0/0/shard1.pid | /backups/data/cluster/shard1/rs0/1/shard1.pid | /backups/data/cluster/shard1/rs0/2/shard1.pid |
| Replica Set Name | rs0 | rs0 | rs0 |

All of this runs on a single server for test purposes, so the diagram looks like this.

Let's build the replica set.

### Shard1 with Primary & Two Secondaries ###

    mkdir -p /backups/data/cluster/shard1/rs0/0/logs
    mkdir -p /backups/data/cluster/shard1/rs0/0/data
    mkdir -p /backups/data/cluster/shard1/rs0/1/logs
    mkdir -p /backups/data/cluster/shard1/rs0/1/data
    mkdir -p /backups/data/cluster/shard1/rs0/2/logs
    mkdir -p /backups/data/cluster/shard1/rs0/2/data

### Create configuration file for node 1, note the port number & directory paths ###

Note: Pay attention to the port number, the directory paths, and the replica set name in the configuration file. These are the settings that make the instance part of the replica set.

vi /backups/data/cluster/shard1/rs0.conf

    systemLog:
      destination: file
      path: "/backups/data/cluster/shard1/rs0/0/logs/rs0.log"
      logAppend: true
    processManagement:
      pidFilePath: "/backups/data/cluster/shard1/rs0/0/shard1.pid"
      fork: true
    net:
      bindIp: 127.0.0.1
      port: 47018
    storage:
      engine: "mmapv1"
      dbPath: "/backups/data/cluster/shard1/rs0/0/data"
      directoryPerDB: true
    operationProfiling:
      mode: all
    replication:
      oplogSizeMB: 5120
      replSetName: "rs0"

### Create configuration file for node 2, note the port number & directory paths ###

vi /backups/data/cluster/shard1/rs1.conf

    systemLog:
      destination: file
      path: "/backups/data/cluster/shard1/rs0/1/logs/rs0.log"
      logAppend: true
    processManagement:
      pidFilePath: "/backups/data/cluster/shard1/rs0/1/shard1.pid"
      fork: true
    net:
      bindIp: 127.0.0.1
      port: 47019
    storage:
      engine: "mmapv1"
      dbPath: "/backups/data/cluster/shard1/rs0/1/data"
      directoryPerDB: true
    operationProfiling:
      mode: all
    replication:
      oplogSizeMB: 5120
      replSetName: "rs0"

### Create configuration file for node 3, note the port number & directory paths ###

vi /backups/data/cluster/shard1/rs2.conf

    systemLog:
      destination: file
      path: "/backups/data/cluster/shard1/rs0/2/logs/rs0.log"
      logAppend: true
    processManagement:
      pidFilePath: "/backups/data/cluster/shard1/rs0/2/shard1.pid"
      fork: true
    net:
      bindIp: 127.0.0.1
      port: 47020
    storage:
      engine: "mmapv1"
      dbPath: "/backups/data/cluster/shard1/rs0/2/data"
      directoryPerDB: true
    operationProfiling:
      mode: all
    replication:
      oplogSizeMB: 5120
      replSetName: "rs0"
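Since the three configuration files differ only in the node index and the port, they could also be generated rather than typed by hand. The following is a minimal sketch in JavaScript (runnable with Node.js), assuming the same base directory, starting port 47018, and settings used in this post; `renderConfig` is a hypothetical helper name, and the output would still need to be saved to the matching `rs0.conf`, `rs1.conf`, or `rs2.conf` file.

```javascript
// Render the mongod configuration for node `index` (0, 1 or 2) as YAML text.
// Paths, port numbering and settings mirror the hand-written files in this post.
function renderConfig(index) {
  const base = "/backups/data/cluster/shard1";
  const nodeDir = `${base}/rs0/${index}`;
  const lines = [
    "systemLog:",
    "  destination: file",
    `  path: "${nodeDir}/logs/rs0.log"`,
    "  logAppend: true",
    "processManagement:",
    `  pidFilePath: "${nodeDir}/shard1.pid"`,
    "  fork: true",
    "net:",
    "  bindIp: 127.0.0.1",
    `  port: ${47018 + index}`,
    "storage:",
    '  engine: "mmapv1"',
    `  dbPath: "${nodeDir}/data"`,
    "  directoryPerDB: true",
    "operationProfiling:",
    "  mode: all",
    "replication:",
    "  oplogSizeMB: 5120",
    '  replSetName: "rs0"',
  ];
  return lines.join("\n") + "\n";
}

// Print the configuration for the second node (index 1, port 47019).
console.log(renderConfig(1));
```

Generating the files from one template avoids copy-and-paste slips such as a misspelled storage engine name in one of the three copies.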

### Start the mongod instances ###

    mongod -f /backups/data/cluster/shard1/rs0.conf
    mongod -f /backups/data/cluster/shard1/rs1.conf
    mongod -f /backups/data/cluster/shard1/rs2.conf

### Connect to the first instance (port 47018) and add the nodes to the replica set ###

    mongo localhost:47018

Running rs.initiate() followed by rs.add("host:port") did not work for the other members because of an /etc/hosts issue, so I passed the full member list to rs.initiate() instead:

    mongo> rs.initiate({_id:"rs0", members: [{"_id":1, "host":"localhost:47018"},{"_id":2, "host":"localhost:47019"},{"_id":3, "host":"localhost:47020"}]})
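The configuration document passed to rs.initiate() has a regular shape, so it can be built from a list of ports instead of being typed inline. This is a small sketch in plain JavaScript; `buildReplSetConfig` is a hypothetical helper, not a MongoDB function, and member `_id` values start at 1 only to match the command above.

```javascript
// Build a replica set configuration document from a host name and a list of ports.
function buildReplSetConfig(name, host, ports) {
  return {
    _id: name,
    members: ports.map((port, i) => ({ _id: i + 1, host: `${host}:${port}` })),
  };
}

const config = buildReplSetConfig("rs0", "localhost", [47018, 47019, 47020]);
console.log(JSON.stringify(config, null, 2));
// In the mongo shell, this object would then be passed as rs.initiate(config).
```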

### Check the replica set status ###

    MongoDB rs0:SECONDARY> rs.status()
    {
        "set" : "rs0",
        "date" : ISODate("2016-11-22T23:31:42.863Z"),
        "myState" : 1,
        "term" : NumberLong(1),
        "heartbeatIntervalMillis" : NumberLong(2000),
        "members" : [
            {
                "_id" : 1,
                "name" : "localhost:47018",
                "health" : 1,
                "state" : 1,
                "stateStr" : "PRIMARY",
                "uptime" : 537,
                "optime" : {
                    "ts" : Timestamp(1479857491, 1),
                    "t" : NumberLong(1)
                },
                "optimeDate" : ISODate("2016-11-22T23:31:31Z"),
                "infoMessage" : "could not find member to sync from",
                "electionTime" : Timestamp(1479857490, 1),
                "electionDate" : ISODate("2016-11-22T23:31:30Z"),
                "configVersion" : 1,
                "self" : true
            },
            {
                "_id" : 2,
                "name" : "localhost:47019",
                "health" : 1,
                "state" : 2,
                "stateStr" : "SECONDARY",
                "uptime" : 23,
                "optime" : {
                    "ts" : Timestamp(1479857491, 1),
                    "t" : NumberLong(1)
                },
                "optimeDate" : ISODate("2016-11-22T23:31:31Z"),
                "lastHeartbeat" : ISODate("2016-11-22T23:31:42.712Z"),
                "lastHeartbeatRecv" : ISODate("2016-11-22T23:31:42.477Z"),
                "pingMs" : NumberLong(0),
                "syncingTo" : "localhost:47018",
                "configVersion" : 1
            },
            {
                "_id" : 3,
                "name" : "localhost:47020",
                "health" : 1,
                "state" : 2,
                "stateStr" : "SECONDARY",
                "uptime" : 23,
                "optime" : {
                    "ts" : Timestamp(1479857491, 1),
                    "t" : NumberLong(1)
                },
                "optimeDate" : ISODate("2016-11-22T23:31:31Z"),
                "lastHeartbeat" : ISODate("2016-11-22T23:31:42.712Z"),
                "lastHeartbeatRecv" : ISODate("2016-11-22T23:31:42.476Z"),
                "pingMs" : NumberLong(0),
                "syncingTo" : "localhost:47018",
                "configVersion" : 1
            }
        ],
        "ok" : 1
    }

Note the "stateStr" values in the output above: the instance running on port 47018 is acting as the primary, and the instances running on ports 47019 and 47020 are secondaries.

Our replica set on a single host is now ready, with one primary and two secondaries.
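Reading the roles out of a long status document by eye is error-prone, so a short helper can reduce it to one line per member. The sketch below is plain JavaScript working on a trimmed copy of the status output (only the `name`, `health`, and `stateStr` fields); `summarize` and `primaryOf` are illustrative names, and in the mongo shell the same functions could be given the result of rs.status() instead of the sample object.

```javascript
// One "host:port STATE" line per replica set member.
function summarize(status) {
  return status.members.map((m) => `${m.name} ${m.stateStr}`);
}

// Name of the primary, or null unless exactly one member reports PRIMARY.
function primaryOf(status) {
  const primaries = status.members.filter((m) => m.stateStr === "PRIMARY");
  return primaries.length === 1 ? primaries[0].name : null;
}

// Trimmed sample with values taken from the rs.status() output in this post.
const sample = {
  set: "rs0",
  members: [
    { name: "localhost:47018", health: 1, stateStr: "PRIMARY" },
    { name: "localhost:47019", health: 1, stateStr: "SECONDARY" },
    { name: "localhost:47020", health: 1, stateStr: "SECONDARY" },
  ],
};

summarize(sample).forEach((line) => console.log(line));
console.log("primary:", primaryOf(sample));
```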

Next Post: Converting Replicaset to a sharded server in Standalone Server

-Thanks

Geek DBA
