In previous posts (here and here) we saw how to delete a node from a cluster and how to add the deleted node back.

In this post we will see how to add a new node to the cluster.

Environment: we assume a new server with the OS (OEL) already installed. The work is done in two phases:

Phase 1. Install or clone the Grid home. You can either install the GI binaries with the GUI installer or clone an existing home; we will use the latter approach.

Phase 2. Add the new node to the cluster.

Read on.

Phase 1 : Clone the Grid Home

1. On the source node (rac2), where GI is already installed, stop GI as root:

[root@rac2 bin]# ./crsctl stop crs

2. Create a staging directory, copy the Grid home into it, and create a tarball of the copy:

[root@rac2 grid]# mkdir /u01/stageGI

[root@rac2 grid]# cp -prf /u01/app/11.2.0.3/grid /u01/stageGI

[root@rac2 grid]# cd /u01/stageGI/grid

[root@rac2 grid]# pwd

/u01/stageGI/grid

[root@rac2 grid]#

[root@rac2 grid]# tar -cvf /tmp/tar11203.tar .

[root@rac2 grid]#
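The staging copy and tarball steps above can be wrapped in a small shell function. This is a sketch that assumes GNU cp and tar. `stage_and_tar` is a hypothetical name, and the paths are passed as arguments (this post uses /u01/app/11.2.0.3/grid, /u01/stageGI and /tmp/tar11203.tar).

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a Grid home into a staging directory, then tar the
# staged copy. Intended to run as root on the source node while CRS is stopped.
stage_and_tar() {
  local src_home=$1 stage_dir=$2 tarball=$3
  local name
  name=$(basename "$src_home")   # e.g. "grid"

  [ -d "$src_home" ] || { echo "missing source home: $src_home" >&2; return 1; }
  mkdir -p "$stage_dir" || return 1

  # -p preserves mode, ownership and timestamps; -R copies the whole tree
  cp -pR "$src_home" "$stage_dir/" || return 1

  # Archive the contents of the staged home (entries start with ./) so the
  # tarball can be extracted directly inside the new Grid home
  tar -C "$stage_dir/$name" -cf "$tarball" . || return 1

  # Sanity check: the archive must be readable
  tar -tf "$tarball" > /dev/null
}

# Usage (values from this post):
#   stage_and_tar /u01/app/11.2.0.3/grid /u01/stageGI /tmp/tar11203.tar
```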

3. Start GI on the source node

[root@rac2 bin]# ./crsctl start crs

4. Create the software locations on the new node rac3 and extract the tarball there. As root, run the following on rac3:

mkdir -p /u01/app/11.2.0.3/grid

mkdir -p /u01/app/grid

chown -R grid:oinstall /u01

mkdir -p /u01/app/oracle

chown oracle:oinstall /u01/app/oracle

chmod -R 775 /u01/

As the grid user on rac3, extract the tarball. First copy /tmp/tar11203.tar from rac2 to /tmp on rac3, for example with scp.

cd /u01/app/11.2.0.3/grid

[grid@rac3 grid]$ tar -xvf /tmp/tar11203.tar

5. Remove the node-specific configuration that was copied from rac2, then set the proper ownership and permissions. As root, run the following on rac3:

cd /u01/app/11.2.0.3/grid

rm -rf rac2

rm -rf log/rac2

rm -rf gpnp/rac2

rm -rf crs/init

rm -rf cdata

rm -rf crf

find gpnp -type f -exec rm -f {} \;

rm -rf network/admin/*.ora

find . -name '*.ouibak' -exec rm {} \;

find . -name '*.ouibak.1' -exec rm {} \;

rm -rf root.sh*

cd cfgtoollogs

find . -type f -exec rm -f {} \;

chown -R grid:oinstall /u01/app/11.2.0.3/grid

chown -R grid:oinstall /u01

chmod -R 775 /u01/
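You can also script the cleanup so it is repeatable. The sketch below is a hypothetical `clean_cloned_home` function that mirrors the manual `rm`/`find` commands. It takes the Grid home and the source node name (rac2 here) as arguments and refuses to run on an empty or missing path. Ownership and permissions are left to the `chown`/`chmod` commands above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove node-specific state copied along with a Grid home.
# Double-check both arguments before running this as root.
clean_cloned_home() {
  local gi_home=$1 src_node=$2

  # rm -rf safety: require a real directory and a non-empty node name
  [ -n "$gi_home" ] && [ -d "$gi_home" ] || { echo "bad home: $gi_home" >&2; return 1; }
  [ -n "$src_node" ] || { echo "source node name required" >&2; return 1; }

  (
    cd "$gi_home" || exit 1

    # Per-node directories and cluster configuration from the source node
    rm -rf -- "$src_node" "log/$src_node" "gpnp/$src_node" crs/init cdata crf

    # GPnP: keep the directory tree, drop the files (profiles, wallets)
    [ -d gpnp ] && find gpnp -type f -exec rm -f {} +

    # Network config, OUI backup files and old root.sh output
    rm -f network/admin/*.ora root.sh*
    find . -type f -name '*.ouibak' -exec rm -f {} +
    find . -type f -name '*.ouibak.1' -exec rm -f {} +

    # Logs written by configuration tools on the source node
    [ -d cfgtoollogs ] && find cfgtoollogs -type f -exec rm -f {} +
    exit 0
  )
}

# Usage (as root on rac3):
#   clean_cloned_home /u01/app/11.2.0.3/grid rac2
```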

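As the grid user, set the setuid and setgid bits on the oracle binary again (the recursive chmod 775 above clears them):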

[grid@rac3 cfgtoollogs]$ chmod u+s /u01/app/11.2.0.3/grid/bin/oracle

[grid@rac3 cfgtoollogs]$ chmod g+s /u01/app/11.2.0.3/grid/bin/oracle
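A quick way to confirm the two `chmod` commands took effect is the shell's built-in `-u`/`-g` file tests. Here is a sketch with a hypothetical `check_setid` function. On Linux, `ls -l` of the binary should then show `s` in both the owner and group execute positions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if both setuid and setgid are set on a file.
check_setid() {
  local bin=$1
  [ -f "$bin" ] || { echo "not found: $bin" >&2; return 1; }
  if [ -u "$bin" ] && [ -g "$bin" ]; then
    echo "OK: setuid and setgid set on $bin"
  else
    echo "MISSING: setuid/setgid not both set on $bin" >&2
    return 1
  fi
}

# Usage (as grid on rac3):
#   check_setid /u01/app/11.2.0.3/grid/bin/oracle
```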

6. Verify peer compatibility and node reachability. The logs are here and here (uploaded separately to keep the post short).
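The checks in step 6 are normally done with `cluvfy`. The sketch below makes several assumptions:

· It runs as grid from an existing node (rac2).
· `GRID_HOME` points at /u01/app/11.2.0.3/grid.
· The function names are hypothetical.
· `DRY_RUN=1` only prints the commands.

Confirm the exact options against the cluvfy help for your release before relying on them.

```shell
#!/usr/bin/env bash
# Assumed Grid home on the existing node; override by exporting GRID_HOME
GRID_HOME=${GRID_HOME:-/u01/app/11.2.0.3/grid}

# Print the command when DRY_RUN is set, otherwise execute it
maybe_run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical wrapper: reachability first, then peer comparison
precheck_new_node() {
  local new_node=$1 ref_node=$2
  # Can the new node be reached from the node running this check?
  maybe_run "$GRID_HOME/bin/cluvfy" comp nodereach -n "$new_node" -verbose || return 1
  # Compare the new node's OS setup (packages, users, groups, kernel
  # parameters) against an existing reference node
  maybe_run "$GRID_HOME/bin/cluvfy" comp peer -refnode "$ref_node" -n "$new_node" -verbose
}

# Usage (as grid on rac2):
#   precheck_new_node rac3 rac2
```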

7. As the grid user on rac3, run clone.pl from $GI_HOME/clone/bin. Before running it, prepare the following values:

· ORACLE_BASE=/u01/app/grid

· ORACLE_HOME=/u01/app/11.2.0.3/grid

· ORACLE_HOME_NAME=Ora11g_gridinfrahome2 (get the home name using OUI on any existing cluster node)
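Instead of opening OUI, you can usually read the home name from the central inventory of an existing node. This sketch makes two assumptions:

· The inventory is at /u01/app/oraInventory (see `inventory_loc` in /etc/oraInst.loc).
· `inventory.xml` lists homes as `<HOME NAME="..." LOC="..." ...>` elements, one per line, with detached homes marked `REMOVED="T"`.

`gi_home_name` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the inventory NAME of the home whose LOC is
# exactly the given path, ignoring homes marked as removed.
gi_home_name() {
  local inv_xml=$1 home=$2
  grep '<HOME ' "$inv_xml" \
    | grep -F "LOC=\"$home\"" \
    | grep -v 'REMOVED="T"' \
    | sed -n 's/.*NAME="\([^"]*\)".*/\1/p' \
    | head -n 1
}

# Usage (on rac2; inventory path is an assumption):
#   gi_home_name /u01/app/oraInventory/ContentsXML/inventory.xml /u01/app/11.2.0.3/grid
```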

Run clone.pl:

[grid@rac3 bin]$ perl clone.pl ORACLE_HOME=/u01/app/11.2.0.3/grid ORACLE_HOME_NAME=Ora11g_gridinfrahome2 ORACLE_BASE=/u01/app/grid SHOW_ROOTSH_CONFIRMATION=false

Note: Because the whole Oracle home was already copied from another node, clone.pl essentially relinks the binaries on the new node.

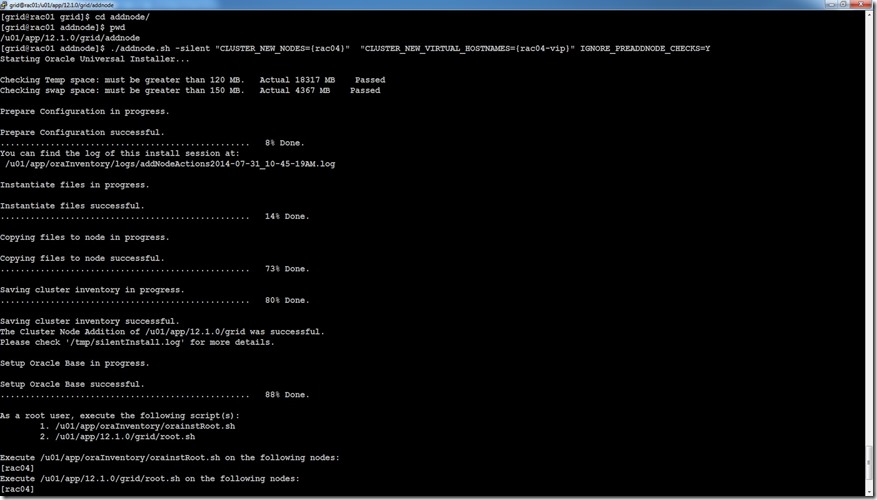
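The clone.pl call can be wrapped so that the values prepared above are always passed the same way. Here is a sketch with `clone_gi_home` as a hypothetical name. `DRY_RUN=1` prints the command instead of running it. Outside a dry run, the function fails early if clone.pl is missing from the copied home.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around clone.pl; arguments: home, home name, base.
clone_gi_home() {
  local home=$1 home_name=$2 base=$3
  local clone_pl="$home/clone/bin/clone.pl"

  # Outside a dry run, stop if the extracted home has no clone.pl
  if [ -z "${DRY_RUN:-}" ] && [ ! -f "$clone_pl" ]; then
    echo "clone.pl not found: $clone_pl" >&2
    return 1
  fi

  # Build the argument list in the positional parameters
  set -- perl "$clone_pl" \
    "ORACLE_HOME=$home" \
    "ORACLE_HOME_NAME=$home_name" \
    "ORACLE_BASE=$base" \
    SHOW_ROOTSH_CONFIRMATION=false

  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Usage (as grid on rac3, values from this post):
#   clone_gi_home /u01/app/11.2.0.3/grid Ora11g_gridinfrahome2 /u01/app/grid
```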

Phase 2 : Add the node to cluster

Run addNode.sh as the grid user from $GI_HOME/oui/bin on any existing node (rac2 here):

[grid@rac2 bin]$ ./addNode.sh -silent -noCopy ORACLE_HOME=/u01/app/11.2.0.3/grid "CLUSTER_NEW_NODES={rac3}" "CLUSTER_NEW_VIRTUAL_HOSTNAMES={rac3-vip}" "CLUSTER_NEW_VIPS={rac3-vip}" CRS_ADDNODE=true CRS_DHCP_ENABLED=false

Note: If you use GNS, you do not need to specify the VIP.

Error 1: the node VIP already exists. The VIP address we supplied was already configured for the SCAN (see the screenshot).

We corrected the entries in the hosts file and ran addNode.sh again.

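You can catch a VIP/SCAN conflict like the one above before running addNode.sh. Look for IP addresses that appear on more than one line of the hosts file, such as a new node VIP that reuses the SCAN address. The sketch below uses awk. `dup_host_ips` is hypothetical, and it only inspects the file you pass it (default /etc/hosts), not DNS.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "IP: name1 name2 ..." for every address that is
# listed on more than one line. Comments and blank lines are ignored; the
# first hostname on each line is reported.
dup_host_ips() {
  local hosts_file=${1:-/etc/hosts}
  awk '
    { sub(/#.*/, "") }          # strip comments (re-splits the fields)
    NF >= 2 {                   # need an address and at least one name
      count[$1]++
      names[$1] = names[$1] " " $2
    }
    END {
      for (ip in count)
        if (count[ip] > 1) print ip ":" names[ip]
    }' "$hosts_file"
}

# Usage: dup_host_ips /etc/hosts   (no output means no duplicated addresses)
```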

When addNode.sh completes, do not run root.sh yet.

Copy the crsconfig parameter files and the GPnP directory from the node where you ran addNode.sh (rac2) to the new node:

[grid@rac2 install]$ scp /u01/app/11.2.0.3/grid/crs/install/crsconfig_params rac3:/u01/app/11.2.0.3/grid/crs/install/crsconfig_params

[grid@rac2 install]$ scp /u01/app/11.2.0.3/grid/crs/install/crsconfig_addparams rac3:/u01/app/11.2.0.3/grid/crs/install/crsconfig_addparams

[grid@rac2 install]$

Also copy the contents of /u01/app/11.2.0.3/grid/gpnp from an existing cluster node (for example rac2) to /u01/app/11.2.0.3/grid/gpnp on rac3.
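The copy steps above can be combined into one function, run as grid on rac2. This is a sketch: `push_cluster_config` is hypothetical, and it assumes ssh user equivalence for grid between rac2 and rac3. `DRY_RUN=1` prints the scp commands instead of running them.

```shell
#!/usr/bin/env bash
# Assumed Grid home (same path on both nodes); override by exporting GI_HOME
GI_HOME=${GI_HOME:-/u01/app/11.2.0.3/grid}

# Print the command when DRY_RUN is set, otherwise execute it
maybe_run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: push the node-add configuration to the new node
push_cluster_config() {
  local new_node=$1 f
  # Parameter files written on the node where addNode.sh ran
  for f in crsconfig_params crsconfig_addparams; do
    maybe_run scp -p "$GI_HOME/crs/install/$f" "$new_node:$GI_HOME/crs/install/$f" || return 1
  done
  # Copy the gpnp directory into the remote Grid home; scp -r writes its
  # contents into the existing gpnp directory there (-p keeps modes/times)
  maybe_run scp -rp "$GI_HOME/gpnp" "$new_node:$GI_HOME/"
}

# Usage (as grid on rac2):
#   push_cluster_config rac3
```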

Finally, run root.sh as root on rac3:

[root@rac3 grid]# ./root.sh

root.sh output:

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/12.1.0/grid

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/12.1.0/grid/crs/install/crsconfig_params

2014/07/31 11:04:23 CLSRSC-4001: Installing Oracle Trace File Analyzer (TFA) Collector.

2014/07/31 11:04:51 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

OLR initialization - successful

2014/07/31 11:05:45 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.conf'

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rac04'

CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'rac04'

CRS-2677: Stop of 'ora.drivers.acfs' on 'rac04' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rac04' has completed

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Starting Oracle High Availability Services-managed resources

CRS-2672: Attempting to start 'ora.mdnsd' on 'rac04'

CRS-2672: Attempting to start 'ora.evmd' on 'rac04'

CRS-2676: Start of 'ora.evmd' on 'rac04' succeeded

CRS-2676: Start of 'ora.mdnsd' on 'rac04' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'rac04'

CRS-2676: Start of 'ora.gpnpd' on 'rac04' succeeded

CRS-2672: Attempting to start 'ora.gipcd' on 'rac04'

CRS-2676: Start of 'ora.gipcd' on 'rac04' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac04'

CRS-2676: Start of 'ora.cssdmonitor' on 'rac04' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rac04'

CRS-2672: Attempting to start 'ora.diskmon' on 'rac04'

CRS-2676: Start of 'ora.diskmon' on 'rac04' succeeded

CRS-2676: Start of 'ora.cssd' on 'rac04' succeeded

CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'rac04'

CRS-2672: Attempting to start 'ora.ctssd' on 'rac04'

CRS-2676: Start of 'ora.ctssd' on 'rac04' succeeded

CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'rac04' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'rac04'

CRS-2676: Start of 'ora.asm' on 'rac04' succeeded

CRS-2672: Attempting to start 'ora.storage' on 'rac04'

CRS-2676: Start of 'ora.storage' on 'rac04' succeeded

CRS-2672: Attempting to start 'ora.crf' on 'rac04'

CRS-2676: Start of 'ora.crf' on 'rac04' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'rac04'

CRS-2676: Start of 'ora.crsd' on 'rac04' succeeded

CRS-6017: Processing resource auto-start for servers: rac04

CRS-2672: Attempting to start 'ora.net1.network' on 'rac04'

CRS-2676: Start of 'ora.net1.network' on 'rac04' succeeded

CRS-2672: Attempting to start 'ora.ons' on 'rac04'

CRS-2676: Start of 'ora.ons' on 'rac04' succeeded

CRS-6016: Resource auto-start has completed for server rac04

CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources

CRS-4123: Oracle High Availability Services has been started.

2014/07/31 11:09:20 CLSRSC-343: Successfully started Oracle Clusterware stack

clscfg: EXISTING configuration version 5 detected.

clscfg: version 5 is 12c Release 1.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

2014/07/31 11:09:44 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

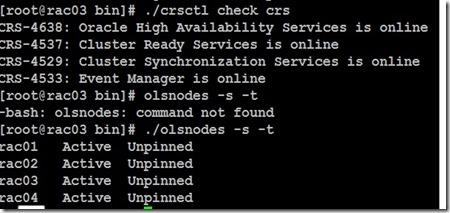

Verify the cluster nodes with olsnodes.
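The final olsnodes check can be scripted by testing its output for the new node. This sketch assumes output in the shape printed by `olsnodes -n -s`: the node name first, then the node number and Active/Inactive, separated by whitespace. `node_is_active` is a hypothetical helper that reads that output on stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if the named node appears with status "Active"
# in olsnodes-style output read from stdin, 1 otherwise.
node_is_active() {
  local node=$1
  awk -v n="$node" '
    $1 == n { for (i = 2; i <= NF; i++) if ($i == "Active") found = 1 }
    END { exit(found ? 0 : 1) }'
}

# Usage (as grid on any node):
#   olsnodes -n -s | node_is_active rac3 && echo "rac3 is Active"
```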
