Before going to the topic, consider this example

An enterprise request system where utilized by the IT employees across the globe and raise requests for their needs like Desktop issues, telephone issues, request for software, request for databases, request for procurement etc. On and average daily 5000+ requests across as I know this system exists one of my previous employers.

After a period of time, the data in this tables become older and not that critical except to understand the trend of requests or understand SLA etc. So the cost of storage will be ever increasing and in demand as you cannot say I don't want this data any more. And that say's when you want you should be able to retrieve the data so it should reside in the database forever.

To manage this huge volume of the data, the design is something to do partitioning at tables or manage the older partitions and move the data to different archival tables and compress them.

Basically there are two flaws with this.

- Though you have partitioning methods in use, the optimizer has to do lot of work to get you the latest data for analysis, like pruning and all, coz the older data still be accessible and need to analyze by optimizer

- The second is the cost, though you moved the partitions to different tables or tablespaces the cost is still same coz you are using same stoage tier (tiering of storage is something associated with speed that comes with cost)

Both of the above were also manual way of managing and operational overhead includes.

So you need

- Compression of older data that is not in usage

- Storage cost reduction by moving to lower storage tier

- Performance benefit

- Fully automatic procedures to reduce operational maintenance

So how about a concept of introducing the optimizing your data that is not used say about 3 months ago and move to different and low storage tier and also gain the performance benefit and that too a native database tool which do this checks automatically and moves your data and mark cold and compress them too.

There you go, an introduction to "Automatic data optimization" a new feature in 12c which exactly address above. This is part of advanced compression feature and licensed one. Seriously I would love to use this feature to the system for the above I mentioned.

Automatic data optimization a part of Oracle Information Life cycle comes with two components called Heat Map and ADO.

- Heat Map which tracks and marks data even down to the row and block level as it goes through life cycle of data changes.

- ADO or Automatic Data Optimization works with the Heat Map feature and allows us to create policies at the tables, object and even row level which specify conditions to dictate when data will be moved or compressed based on statistics related to the data usage using scheduler jobs.

Lets take a closer look at Segment Compressions or Data optimization techniques that Oracle adapts so far. (12c Has new names of older compression technologies)

| Until 11g | In 12c | Explanation |

| OLTP Compression | Advanced Row Compression | Compress at row level |

| Secure File Compression | Advanced Lob Compression | Lob compression |

| Secure File Deduplication | Advanced Lob Deduplication | File level compression |

| Heat Map (object & row level) | Tracks the access of data at row or segment level | |

| Automatic Data Optimization | Collection of policies and scheduler jobs to move and compress the data based on the heat map tracked data | |

| Temporal Optimization | Optimizing the data for temporary basis temporal validity | |

| Hybrid Columnar Compression | Hybrid columnar compression | Compress the data at column level finally |

HeatMap:-

Heatmap with in the database when enabled (instance level parameter heatmap=on) it tracks the data usage at segment and row level. It will basically looks at following.

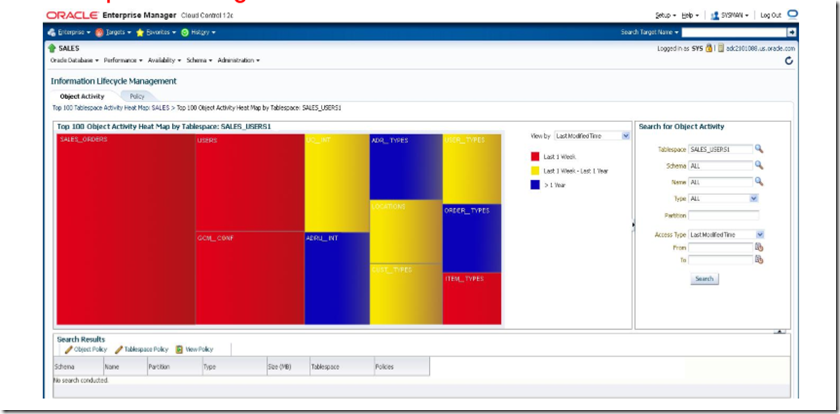

Heatmap tracks the data based on Active, Frequent Access, Occassional Access, Dormant and mark the row/segment/block appropriately. This is how the enterprise manager heatmap screen looks like.

Notes about heatmap:-

- Database level Heat Map shows which tables and partitions are being used

- Block level Heat Map shows last modification at the block level

- Segment level shows both reads and writes

- Distinguishes index lookups from full scans

- Automatically excludes stats gathering, DDLs or table redefinitions

- Cost & Performance Object level at no cost , Block level < 5% cost

ADO (Automatic Data Optimization)

ADO is a policy based technique which can in turn to a declarative SQL statement condition and then action either compression or storage tiering. Once the condition met there are new jobs in dbms_scheduler which the action either compressing or moving will be invoked. For example

You want a table service_request where,

- All the data that should be row level compression older than one week if not used

- All the data that should be advanced compression older than a month if not used

- All the data that should be moved to lower tier storage older than three months if not used

- All the data that should be again compressed for archive (column level compression) older than a year if not used

So lets look at it, how and what you have to do (assuming you have heatmap enabled on )

| Table data if not used | HeatMap Matrix | Technique use | ADO Command | Compress % |

| Older than a week | Active | Advanced Row Compression | alter table service_request add policy compress for advanced row after 7 days of no update; | 2-4X |

| Older than a month | Frequent Access | Advanced query compression | alter table service_request add policy compress for query low after 30 days of no update; | 10X |

| Older than three months | Occasional Access | Advanced Columnar Compression | ALTER TABLE service_request MODIFY PARTITION 2013_06_Req ILM ADD POLICY TIER TO Tier2_TBS; Note:-Tier2_TBS is a new tablespace created on low cost storage |

10X |

| Older than a year | Dormant | Advanced Columnar Compression | alter table service_request add policy compress for archive after 365 days of no update; | 15-50X |

You can also use other policies like compress basic segment after 3 months (basic compression no license required), Note the Hybrid columnar compression works only with exadata.

Once the policy is enabled for database/segment/tablespace etc the jobs will be run their schedule time.

Important views for ILM:-

- DBA_ILMPOLICIES – What are all policies and the status

- DBA_ILMDATAMOVEMENTPOLICIES – Storage movement policies enabled

- DBA_ILMEVALUATIONDETAILS – Prediction and evaluation details

- DBA_ILMPARAMETERS – Parameters for ILM

- DBA_ILMRESULTS – Results after the action taken

- DBA_ILMTASKS – About Jobs

Next Post:- Practice on Heatmap & ADO

[…] 12c Database : Automatic Data Optimization (Compression and Optimization) […]